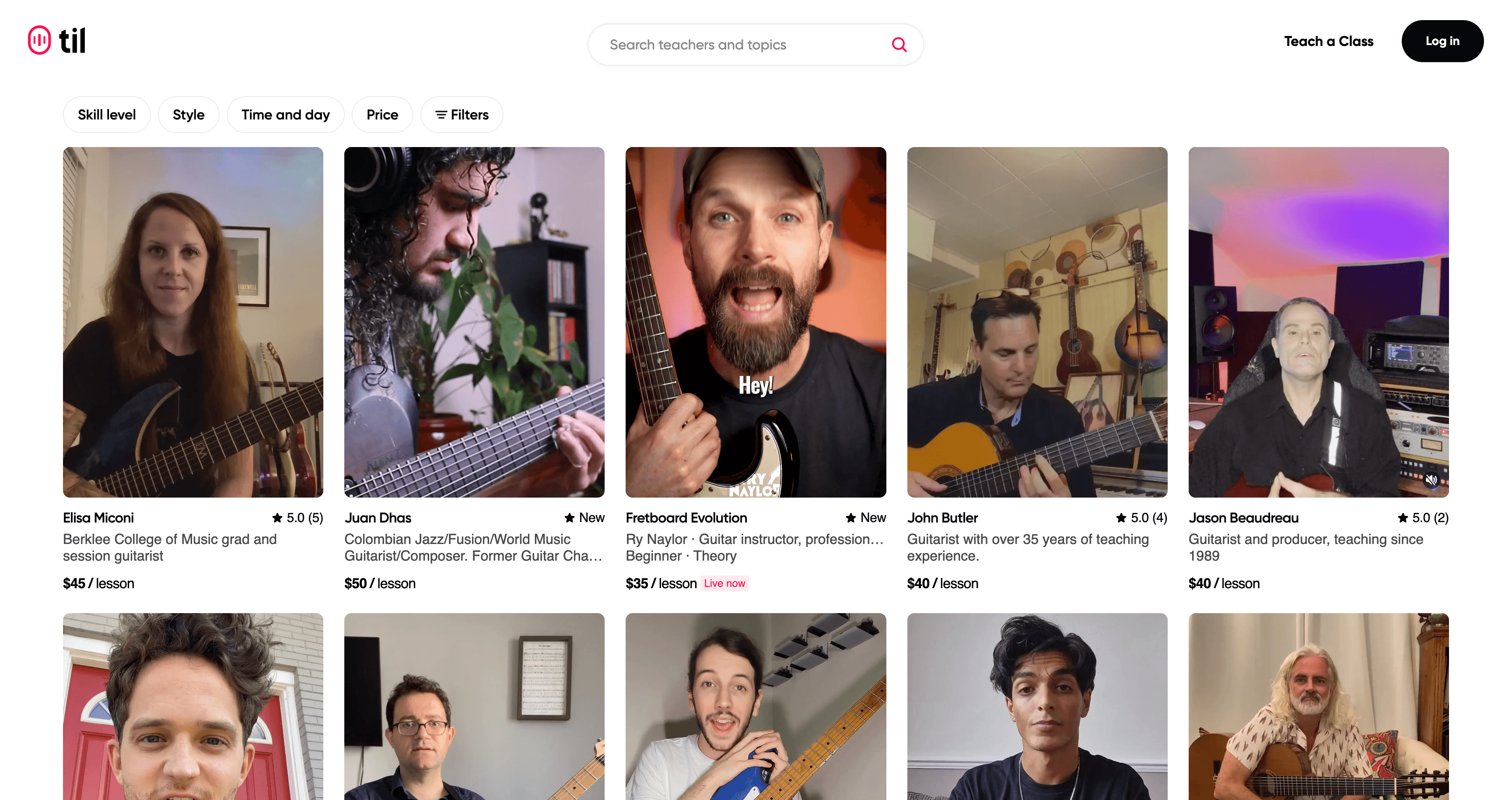

Building a Lightning-Fast Search Experience: Mistakes, Breakthroughs, and Performance Wins

Our search page had a serious problem: 11+ seconds to load content—a conversion killer for our guitar lesson marketplace. Users were dropping off before they could discover our amazing teachers, making this a top-priority challenge for our team.

Our mission wasn't about incremental improvements—we needed a complete transformation. The goal was ambitious but clear: create a lightning-fast, TikTok-inspired browsing experience that would keep guitar enthusiasts glued to their screens as they discovered new teachers.

What started as a straightforward architecture project turned into one of the most educational experiences of my career, filled with unexpected roadblocks, surprising discoveries, and performance wins that ultimately cut our load time by 66% and dramatically boosted user engagement.

The Challenge

With this project being a complete overhaul, making the right architectural choices from the outset would be critical to our success. The wrong decisions early on could lead to performance bottlenecks that would be difficult to solve later.

Creating a fast, engaging search experience meant nailing several key aspects:

- Instant initial loading: Users needed to see content immediately upon arrival.

- Responsive filtering: Adjusting filters had to feel snappy, with no perceptible delay.

- State persistence: All searches and filter combinations needed shareable URLs.

- Optimized video playback: As a video-heavy platform, we needed efficient loading of lesson previews to avoid overwhelming the network and slowing down the site.

- Smooth transitions: The entire experience needed to feel cohesive and fluid.

Having worked on similar projects at Til, I already knew we'd be leveraging Mux for video streaming and had experience with Next.js's Full Route Cache for initial data fetching—both of which would give us significant performance gains.

However, two main concerns kept me up at night:

- Server-side uncertainty: With the recent shift toward server components in React and Next.js's push for server-side rendering, I wasn't entirely confident about the best way to balance server and client responsibilities for a feature as interactive as search.

- Cohesive adoption: Building an isolated feature is one thing, but creating a system where server-side rendering, client-side interactivity, and URL state management all work together seamlessly? That was the real challenge.

As the engineer leading this project end-to-end, I knew that if I wanted to deliver on time, I needed to figure this out ASAP.

Architecture Planning

Given our performance requirements, I mapped out an approach that would address each key challenge:

- Initial data loading: Leverage Next.js's full route cache to pre-render pages with all lesson data at build time, essentially eliminating database queries for repeat visitors.

- State persistence: Use URL parameters to maintain state across page reloads and enable shareable links.

- URL parameter handling: TBD - I hoped to integrate nuqs, a type-safe URL parameter library I'd used successfully in previous projects

- Filter logic: TBD — needed to evaluate client-side vs server-side filtering based on our catalog size and performance targets.

- Video streaming: Continue leveraging Mux for efficient video delivery with optimized lazy loading.

This approach seemed promising, but I wasn't ready to commit without validation. I've learned through experience that a blend of independent thinking and learning from others usually provides the best outcomes.

Finding Architectural Inspiration

In my earlier years as a developer, I often designed systems and dove straight into implementation without much external validation. That approach led to more than a few rabbit holes and reinvented wheels. These days, I try to be more deliberate about research before committing to an implementation path.

Back to the project, initial searches for "building a filtering system with Next.js" yielded surprisingly generic results. However, a Twitter search led me to Aurora Scharff's excellent article on Managing Advanced Search Param Filtering in the Next.js App Router.

This finding was critical—we had already mastered Mux for video streaming and leveraged Next.js's full route cache in other projects. The URL parameter handling was the missing piece for a cohesive solution.

Architectural Adaptation

With the latest trends pushing functionality to server-side rendering, and nuqs being compatible with server components, conventional wisdom suggested filtering data at the database level was the right call. After all, that's typically the most efficient approach for large datasets.

However, our catalog consisted of only about 100 classes. Adding database roundtrips for each filter change would introduce unnecessary latency. Instead, I opted for client-side data filtering—a decision that would prove crucial for performance.

To recap my revised approach:

- Server-side rendering with full route caching for the initial data load

- Client-side filtering for instant UI feedback without server roundtrips

- Robust URL parameter management for state persistence and shareability

Building the Solution

Now that I had a clear architectural direction, it was time to implement each component of our solution.

Server-Side Rendering & Caching

For the initial data load, we leveraged Next.js's full route cache to eliminate database queries for repeat visitors. This approach pre-renders the page with all lessons at build time and serves the cached version to users:

// search.tsx

export const revalidate = 172800 // 48 hours

async function SearchPage() {

// This data fetch happens at build time and is cached

const classes = await fetchAllClasses()

return <ClientSideSearch initialClasses={classes} />

}

With a 48-hour revalidation period and the ability to trigger manual cache invalidation when new lessons were published, we ensured content stayed fresh without sacrificing performance.

Implementing Type-Safe URL Parameters

Following Aurora's approach, I implemented a streamlined version of nuqs for our search parameters:

// nuqs-parser.ts

'server-only'

import {

parseAsString,

createSearchParamsCache,

parseAsArrayOf,

parseAsInteger,

} from 'nuqs/server'

// Parser configuration for our key parameters

export const searchParsers = {

// Basic search query

q: parseAsString.withDefault(''),

// Array of music styles (jazz, rock, blues, etc.)

styles: parseAsArrayOf(parseAsString).withDefault([]),

// Maximum price filter without a default

maxPrice: parseAsInteger,

}

// Create a server-side cache for the search parameters

export const searchParamsCache = createSearchParamsCache(searchParsers)

Then, I created a custom React hook for components to easily access and update these URL parameters:

// use-search.ts

'use client'

import { useQueryStates } from 'nuqs'

import { searchParsers } from '../utils/nuqs-parser'

export function useSearch() {

return useQueryStates(searchParsers, {

shallow: false,

})

}

This simplified approach provided several advantages:

- Type safety across the entire application

- Shareable URLs that preserved filter state

- Consistent defaults for core parameters

- Serialization handling for complex types like arrays

Client-Side Filtering

With our data loaded upfront and cached, implementing client-side filtering was relatively straightforward. Most filters were handled with simple JavaScript operations on our cached dataset.

For our search query functionality, we continued using Fuse.js, which was already implemented in our codebase. This fuzzy search library was treated differently from other filters, allowing for more forgiving text matching that could handle typos and partial matches.

This approach meant that when users adjusted filters, the UI responded instantly without any network requests or server round-trips—creating that "snappy" experience we were aiming for.

Video Streaming Optimization

We had been using Mux for video streaming since day one, which gave us a solid foundation for the search page redesign. Mux's player component provided several important features for performance:

- Chunked loading: Videos are broken into segments to stream efficiently

- Adaptive bitrate: Quality adjusts based on network conditions

- Lazy loading: Videos can load only when needed

For our TikTok-inspired interface, the ability to lazy-load videos was crucial to prevent excessive network requests. Their component allowed us to defer video initialization until the component entered the viewport.

Mistakes and Learnings

Like many engineering projects, the path to a performant search page wasn't as smooth as I'd initially expected. What seemed like a clear architectural plan on paper quickly became a messy debugging adventure once we started building preview branches.

Performance Bottlenecks

I have to be honest, I was really confident in our page's performance from the outset. This was essentially a greenfield project—a complete rewrite of our search interface without any inherited overhead or bloat. We were caching data, parallelizing queries, and implementing all the best practices I could think of.

However, this confidence was quickly shattered when I started creating preview branches. All of a sudden, my excitement to wrap up and ship the project turned into genuine confusion as I watched the performance metrics roll in.

Despite all the optimizations—reduced server load with caching, minimized client-side data transfer, lazy loading for videos, parallelized backend queries—our search page was just as slow as it was before. Granted, it looked amazing with its TikTok-inspired interface. But it was still painfully slow.

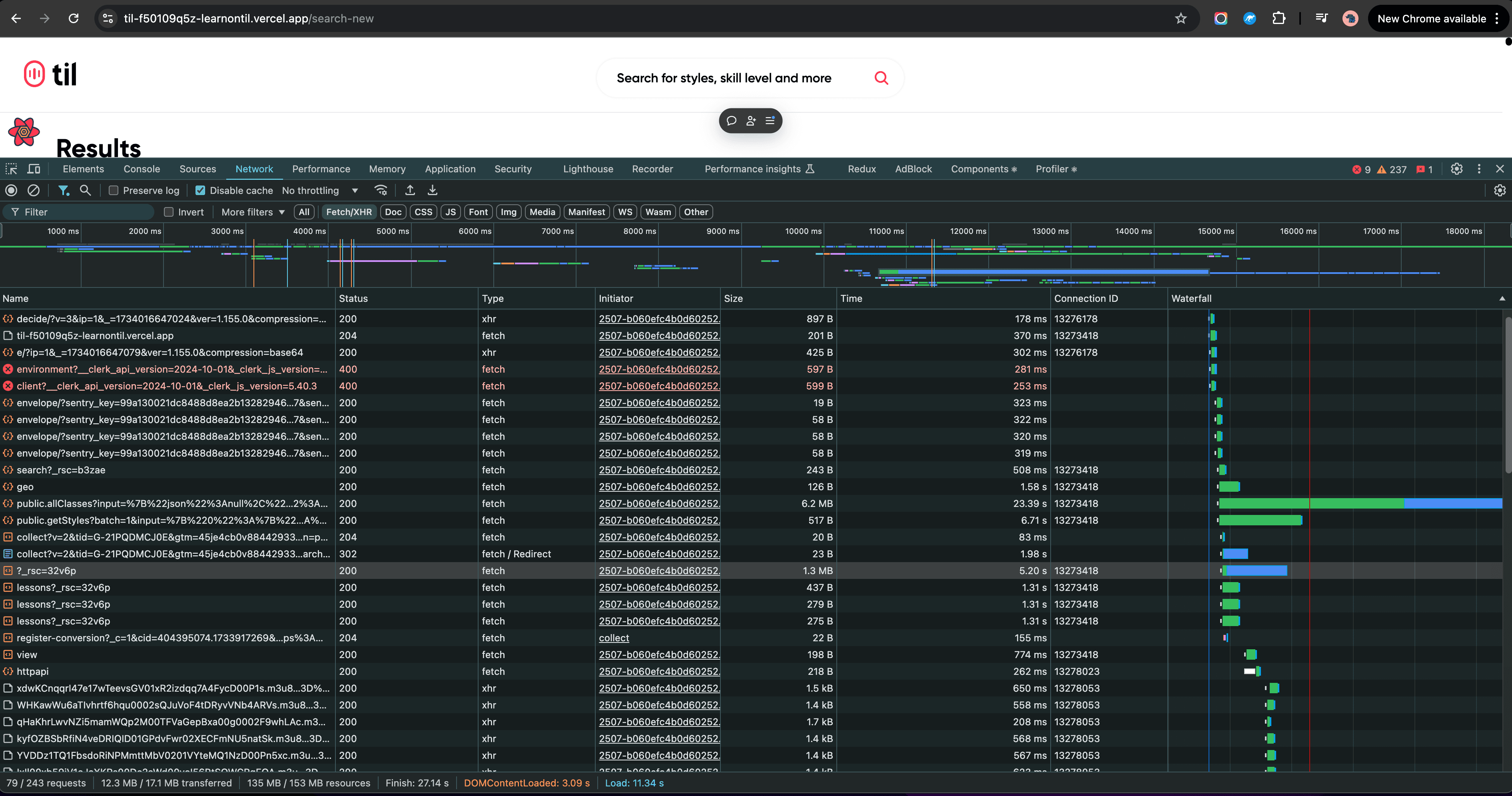

Taking a Step Back: Network Debugging

After trying several quick fixes and seeing minimal improvement, I realized I needed to go back to basics. I ran performance audits, made targeted optimizations based on those results, and ran the audits again—only to see marginal gains.

The key metrics I was tracking were discouraging:

- DOMContentLoaded: 3.09s (when the HTML is fully loaded)

- Page Load: 11.34s (when all resources are fully loaded)

These numbers were far from our target of 2-3 seconds for full interactivity, and my usual optimization tricks weren't making a meaningful difference.

It was time to take a completely different approach. I spent an evening watching Chrome DevTools tutorials and performance debugging videos, determined to understand what was happening at a fundamental level. Instead of making educated guesses, I decided to systematically work my way up from first principles.

This methodical approach would prove crucial in what happened next.

Eureka! The Hidden Culprit

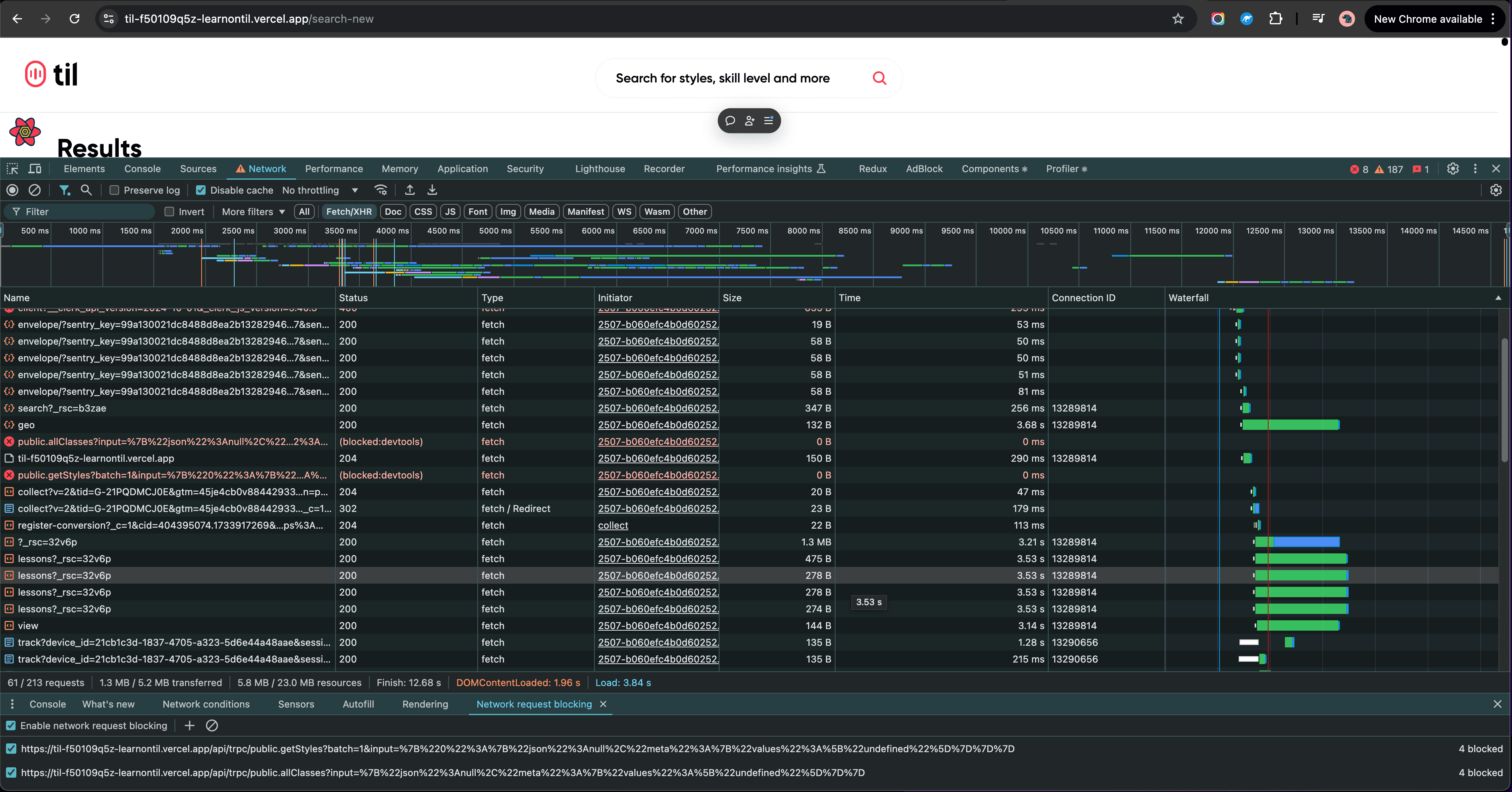

After familiarizing myself with advanced Chrome DevTools techniques, I discovered the "Block Requests" feature—which became the turning point in my investigation.

This feature allowed me to systematically disable different network requests to isolate their impact on performance. What I discovered was both surprising and frustrating.

Our navbar component was querying two completely unnecessary endpoints:

/trpc/public.allClasses/trpc/public.getAllStyles

These weren't just any queries—they were severely underoptimized legacy endpoints from an earlier version of our app and carried significant overhead, effectively loading our entire lesson catalog multiple times on every page load!

When I blocked just these redundant requests, our metrics improved dramatically:

- DOMContentLoaded: dropped from 3.09s to 1.96s

- Page Load: dropped from 11.34s to 3.84s (a 66% improvement!)

Further Video Optimization

Even with our core performance issues resolved, we needed to further optimize video loading. Despite Mux's lazy-loading capabilities, having dozens of video players ready to initialize was still causing network congestion.

During a review, our CEO asked a simple but insightful question: "How does TikTok do it? Do they load all the videos at once?"

This prompted me to inspect TikTok's explore page more carefully. I discovered they don't even render video players in the DOM until a user hovers over a thumbnail—they show optimized static images instead.

Taking inspiration from this approach, we implemented a similar strategy:

function VideoCard({ lesson }) {

const [isHovering, setIsHovering] = useState(false)

return (

<div

onMouseEnter={() => setIsHovering(true)}

onMouseLeave={() => setIsHovering(false)}

>

{isHovering ? (

<MuxPlayer

streamType="on-demand"

playbackId={lesson.playbackId}

metadata={{

video_title: lesson.title,

player_name: 'Til Search Player',

}}

/>

) : (

<img

src={`https://image.mux.com/${lesson.playbackId}/thumbnail.jpg`}

alt={lesson.title}

loading="lazy"

/>

)}

</div>

)

}

This approach dramatically reduced initial network load and created a much smoother browsing experience. Sometimes the best solutions come from looking at how larger companies have solved similar problems.

The Mysterious Echo

During testing, I encountered a strange issue—when hovering over thumbnails, I could hear an echo, as if the video's audio track was playing twice.

We were already building a small demo app to share with Mux's support team due to some other integration challenges we were facing. I added this new echo issue to the demo and included a link to our preview branch for them to investigate.

Their response surprised me: "We can see two player elements in the DOM when you hover."

After diving into our component, I realized we were conditionally hiding the video player with CSS rather than removing it from the DOM:

// 🚩 Problematic code

function ResponsiveVideoCard({ lesson }) {

return (

<>

<div className="hidden md:block">

<VideoCard lesson={lesson} />

</div>

<div className="block md:hidden">

<MobileVideoCard lesson={lesson} />

</div>

</>

)

}

When users hovered on desktop, both the desktop and (hidden) mobile players were initializing and playing simultaneously! The CSS was hiding the element visually, but both video players remained active in the DOM. Switching to conditional rendering fixed the issue:

// ✅ Fixed code

function ResponsiveVideoCard({ lesson }) {

const isMobile = useMediaQuery('(max-width: 768px)')

return (

<>

{!isMobile ? (

<VideoCard lesson={lesson} />

) : (

<MobileVideoCard lesson={lesson} />

)}

</>

)

}

This was a valuable reminder that CSS-based responsive design techniques like hidden classes don't actually remove elements from the DOM. For complex components like video players, it's often better to use JavaScript to conditionally render elements rather than simply hiding them with CSS.

Key Learnings

This project transformed my approach to performance optimization and architecture in several fundamental ways:

The Art of Performance Debugging

Technical problems rarely announce themselves clearly. The most valuable skill I developed was a methodical approach to performance debugging—isolating variables, questioning assumptions, and letting data guide decisions rather than intuition.

Using Chrome DevTools' "Block Requests" feature strategically proved more valuable than a dozen optimizations based on assumptions. When tackling performance, a systematic, evidence-based approach beats guesswork every time.

Architectural Boundaries Matter

The most insidious performance issues often lurk at the boundaries between systems. Our biggest bottleneck wasn't in the new search architecture but in how it interacted with existing components—a lesson in holistic system thinking that's influenced how I approach architecture ever since.

Inspiration Is Everywhere

TikTok's approach to video loading provided the blueprint for our most effective UX optimization. There's immense value in studying how industry leaders have solved similar technical challenges, especially for common user experience patterns.

Implementation Details Can Make or Break UX

The echo bug taught me that even subtle implementation choices—like using CSS hiding versus conditional rendering—can have outsized impacts on user experience. These details matter tremendously, especially for resource-intensive components.

The Results

The final metrics speak for themselves:

- 66% faster page load: from 11.3s to 3.8s

- 37% faster interactivity: DOMContentLoaded improved from 3.1s to 1.9s

- Zero-latency filtering: filtering now happens entirely client-side

- Smoother video experience: videos load on demand rather than all at once

More importantly, user engagement with our search page increased dramatically after the relaunch, with longer session durations and higher conversion rates to lesson enrollments.

Final Thoughts

This project taught me that thoughtful architecture is just the beginning—equally important is understanding how systems actually interact in production. While I had meticulously designed our approach, it was only through challenging my assumptions and investigating cross-component interactions that I uncovered the real performance bottleneck.

The most valuable insight wasn't technical—it was that sometimes the biggest performance wins come from challenging assumptions and approaching problems with fresh eyes. In the rush to adopt the latest architectural patterns, it's easy to overlook fundamentals like redundant network requests or inefficient DOM manipulation.

This project reinforced a core truth of software engineering: the gap between a working system and an exceptional one often lies not in following trends, but in the relentless pursuit of understanding—truly understanding—how your code behaves as a whole. That pursuit is what transforms good engineers into exceptional ones, and it's a journey I'm still very much on.